commit

f2d612d5f3

11 changed files with 525 additions and 0 deletions

+5 -0 .gitignore

+62 -0 encode_faces.py

BIN encodings.pickle

BIN examples/example_01.png

BIN examples/example_02.png

BIN examples/example_03.png

+79 -0 recognize_faces_image.py

+133 -0 recognize_faces_video.py

+132 -0 recognize_faces_video_file.py

+114 -0 search_bing_api.py

BIN test.pickle

+ 5

- 0

.gitignore

| @ -0,0 +1,5 @@ | |||||

| videos/ | |||||

| output/ | |||||

| dataset/* | |||||

| new_dataset/* | |||||

| */pickle | |||||

+ 62

- 0

encode_faces.py

| @ -0,0 +1,62 @@ | |||||

| # USAGE | |||||

| # python encode_faces.py --dataset dataset --encodings encodings.pickle | |||||

| # import the necessary packages | |||||

| from imutils import paths | |||||

| import face_recognition | |||||

| import argparse | |||||

| import pickle | |||||

| import cv2 | |||||

| import os | |||||

| # construct the argument parser and parse the arguments | |||||

| ap = argparse.ArgumentParser() | |||||

| ap.add_argument("-i", "--dataset", required=True, | |||||

| help="path to input directory of faces + images") | |||||

| ap.add_argument("-e", "--encodings", required=True, | |||||

| help="path to serialized db of facial encodings") | |||||

| ap.add_argument("-d", "--detection-method", type=str, default="cnn", | |||||

| help="face detection model to use: either `hog` or `cnn`") | |||||

| args = vars(ap.parse_args()) | |||||

| # grab the paths to the input images in our dataset | |||||

| print("[INFO] quantifying faces...") | |||||

| imagePaths = list(paths.list_images(args["dataset"])) | |||||

| # initialize the list of known encodings and known names | |||||

| knownEncodings = [] | |||||

| knownNames = [] | |||||

| # loop over the image paths | |||||

| for (i, imagePath) in enumerate(imagePaths): | |||||

| # extract the person name from the image path | |||||

| print("[INFO] processing image {}/{}".format(i + 1, | |||||

| len(imagePaths))) | |||||

| name = imagePath.split(os.path.sep)[-2] | |||||

| # load the input image and convert it from BGR (OpenCV ordering) | |||||

| # to dlib ordering (RGB) | |||||

| image = cv2.imread(imagePath) | |||||

| rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) | |||||

| # detect the (x, y)-coordinates of the bounding boxes | |||||

| # corresponding to each face in the input image | |||||

| boxes = face_recognition.face_locations(rgb, | |||||

| model=args["detection_method"]) | |||||

| # compute the facial embedding for the face | |||||

| encodings = face_recognition.face_encodings(rgb, boxes) | |||||

| # loop over the encodings | |||||

| for encoding in encodings: | |||||

| # add each encoding + name to our set of known names and | |||||

| # encodings | |||||

| knownEncodings.append(encoding) | |||||

| knownNames.append(name) | |||||

| # dump the facial encodings + names to disk | |||||

| print("[INFO] serializing encodings...") | |||||

| data = {"encodings": knownEncodings, "names": knownNames} | |||||

| f = open(args["encodings"], "wb") | |||||

| f.write(pickle.dumps(data)) | |||||

| f.close() | |||||
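
The labelling and serialization steps of `encode_faces.py` can be sketched without any image libraries. The helper names below (`label_from_path`, `save_encodings`, `load_encodings`) are hypothetical and not part of this commit; the sketch only assumes the layout implied by the script, where each image sits in a directory named after the person, and it swaps the explicit `open`/`close` pair for a context manager.

```python
import os
import pickle
import tempfile


def label_from_path(image_path):
    # The label is the directory that directly contains the image,
    # e.g. dataset/alan_grant/00000001.jpg -> "alan_grant".
    return os.path.basename(os.path.dirname(image_path))


def save_encodings(path, encodings, names):
    # The context manager closes the file even if pickling raises.
    with open(path, "wb") as f:
        pickle.dump({"encodings": encodings, "names": names}, f)


def load_encodings(path):
    # Only unpickle files you produced yourself; pickle can run code on load.
    with open(path, "rb") as f:
        return pickle.load(f)
```

In the real script each entry of `encodings` is a 128-dimensional vector returned by `face_recognition.face_encodings`; any picklable list works for trying out the round trip.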

BIN

encodings.pickle

BIN

examples/example_01.png

BIN

examples/example_02.png

BIN

examples/example_03.png

+ 79

- 0

recognize_faces_image.py

| @ -0,0 +1,79 @@ | |||||

| # USAGE | |||||

| # python recognize_faces_image.py --encodings encodings.pickle --image examples/example_01.png | |||||

| # import the necessary packages | |||||

| import face_recognition | |||||

| import argparse | |||||

| import pickle | |||||

| import cv2 | |||||

| # construct the argument parser and parse the arguments | |||||

| ap = argparse.ArgumentParser() | |||||

| ap.add_argument("-e", "--encodings", required=True, | |||||

| help="path to serialized db of facial encodings") | |||||

| ap.add_argument("-i", "--image", required=True, | |||||

| help="path to input image") | |||||

| ap.add_argument("-d", "--detection-method", type=str, default="cnn", | |||||

| help="face detection model to use: either `hog` or `cnn`") | |||||

| args = vars(ap.parse_args()) | |||||

| # load the known faces and embeddings | |||||

| print("[INFO] loading encodings...") | |||||

| data = pickle.loads(open(args["encodings"], "rb").read()) | |||||

| # load the input image and convert it from BGR to RGB | |||||

| image = cv2.imread(args["image"]) | |||||

| rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) | |||||

| # detect the (x, y)-coordinates of the bounding boxes corresponding | |||||

| # to each face in the input image, then compute the facial embeddings | |||||

| # for each face | |||||

| print("[INFO] recognizing faces...") | |||||

| boxes = face_recognition.face_locations(rgb, | |||||

| model=args["detection_method"]) | |||||

| encodings = face_recognition.face_encodings(rgb, boxes) | |||||

| # initialize the list of names for each face detected | |||||

| names = [] | |||||

| # loop over the facial embeddings | |||||

| for encoding in encodings: | |||||

| # attempt to match each face in the input image to our known | |||||

| # encodings | |||||

| matches = face_recognition.compare_faces(data["encodings"], | |||||

| encoding) | |||||

| name = "Unknown" | |||||

| # check to see if we have found a match | |||||

| if True in matches: | |||||

| # find the indexes of all matched faces then initialize a | |||||

| # dictionary to count the total number of times each face | |||||

| # was matched | |||||

| matchedIdxs = [i for (i, b) in enumerate(matches) if b] | |||||

| counts = {} | |||||

| # loop over the matched indexes and maintain a count for | |||||

| # each recognized face | |||||

| for i in matchedIdxs: | |||||

| name = data["names"][i] | |||||

| counts[name] = counts.get(name, 0) + 1 | |||||

| # determine the recognized face with the largest number of | |||||

| # votes (note: in the event of an unlikely tie Python will | |||||

| # select first entry in the dictionary) | |||||

| name = max(counts, key=counts.get) | |||||

| # update the list of names | |||||

| names.append(name) | |||||

| # loop over the recognized faces | |||||

| for ((top, right, bottom, left), name) in zip(boxes, names): | |||||

| # draw the predicted face name on the image | |||||

| cv2.rectangle(image, (left, top), (right, bottom), (0, 255, 0), 2) | |||||

| y = top - 15 if top - 15 > 15 else top + 15 | |||||

| cv2.putText(image, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX, | |||||

| 0.75, (0, 255, 0), 2) | |||||

| # show the output image | |||||

| cv2.imshow("Image", image) | |||||

| cv2.waitKey(0) | |||||
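
The voting step in the recognition loop can be isolated as a small pure function. This is a minimal sketch, assuming `matches` is the list of booleans returned by `face_recognition.compare_faces` and `known_names` is the parallel `data["names"]` list; the function name `vote` and its `default` parameter are made up for illustration.

```python
def vote(matches, known_names, default="Unknown"):
    # Count one vote per stored encoding that matched the query face.
    counts = {}
    for matched, name in zip(matches, known_names):
        if matched:
            counts[name] = counts.get(name, 0) + 1
    # No stored encoding matched: keep the default label.
    if not counts:
        return default
    # max() returns the first key with the highest count, so a tie goes
    # to the name that was inserted into the dictionary first.
    return max(counts, key=counts.get)


print(vote([True, False, True, True], ["alan", "ian", "ian", "alan"]))
```

Because the result depends only on the two lists, the tie-breaking rule mentioned in the script's comment can be checked without loading any images.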

+ 133

- 0

recognize_faces_video.py

| @ -0,0 +1,133 @@ | |||||

| #!/usr/bin/env python3 | |||||

| # USAGE | |||||

| # python recognize_faces_video.py --encodings encodings.pickle | |||||

| # python recognize_faces_video.py --encodings encodings.pickle --output output/jurassic_park_trailer_output.avi --display 0 | |||||

| # import the necessary packages | |||||

| from imutils.video import VideoStream | |||||

| import face_recognition | |||||

| import argparse | |||||

| import imutils | |||||

| import pickle | |||||

| import time | |||||

| import cv2 | |||||

| # construct the argument parser and parse the arguments | |||||

| ap = argparse.ArgumentParser() | |||||

| ap.add_argument("-e", "--encodings", required=True, | |||||

| help="path to serialized db of facial encodings") | |||||

| ap.add_argument("-o", "--output", type=str, | |||||

| help="path to output video") | |||||

| ap.add_argument("-y", "--display", type=int, default=1, | |||||

| help="whether or not to display output frame to screen") | |||||

| ap.add_argument("-d", "--detection-method", type=str, default="cnn", | |||||

| help="face detection model to use: either `hog` or `cnn`") | |||||

| args = vars(ap.parse_args()) | |||||

| # load the known faces and embeddings | |||||

| print("[INFO] loading encodings...") | |||||

| data = pickle.loads(open(args["encodings"], "rb").read()) | |||||

| # initialize the video stream and pointer to output video file, then | |||||

| # allow the camera sensor to warm up | |||||

| print("[INFO] starting video stream...") | |||||

| vs = VideoStream(src=0).start() | |||||

| writer = None | |||||

| time.sleep(2.0) | |||||

| # loop over frames from the video stream | |||||

| while True: | |||||

| try: | |||||

| # grab the frame from the threaded video stream | |||||

| frame = vs.read() | |||||

| # convert the input frame from BGR to RGB then resize it to have | |||||

| # a width of 750px (to speed up processing) | |||||

| rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) | |||||

| rgb = imutils.resize(rgb, width=750) | |||||

| r = frame.shape[1] / float(rgb.shape[1]) | |||||

| # detect the (x, y)-coordinates of the bounding boxes | |||||

| # corresponding to each face in the input frame, then compute | |||||

| # the facial embeddings for each face | |||||

| boxes = face_recognition.face_locations(rgb, | |||||

| model=args["detection_method"]) | |||||

| encodings = face_recognition.face_encodings(rgb, boxes) | |||||

| names = [] | |||||

| # loop over the facial embeddings | |||||

| for encoding in encodings: | |||||

| # attempt to match each face in the input image to our known | |||||

| # encodings | |||||

| matches = face_recognition.compare_faces(data["encodings"], | |||||

| encoding) | |||||

| name = "Unknown" | |||||

| # check to see if we have found a match | |||||

| if True in matches: | |||||

| # find the indexes of all matched faces then initialize a | |||||

| # dictionary to count the total number of times each face | |||||

| # was matched | |||||

| matchedIdxs = [i for (i, b) in enumerate(matches) if b] | |||||

| counts = {} | |||||

| # loop over the matched indexes and maintain a count for | |||||

| # each recognized face | |||||

| for i in matchedIdxs: | |||||

| name = data["names"][i] | |||||

| counts[name] = counts.get(name, 0) + 1 | |||||

| # determine the recognized face with the largest number | |||||

| # of votes (note: in the event of an unlikely tie Python | |||||

| # will select first entry in the dictionary) | |||||

| name = max(counts, key=counts.get) | |||||

| # update the list of names | |||||

| names.append(name) | |||||

| # loop over the recognized faces | |||||

| for ((top, right, bottom, left), name) in zip(boxes, names): | |||||

| # rescale the face coordinates | |||||

| top = int(top * r) | |||||

| right = int(right * r) | |||||

| bottom = int(bottom * r) | |||||

| left = int(left * r) | |||||

| # draw the predicted face name on the image | |||||

| cv2.rectangle(frame, (left, top), (right, bottom), | |||||

| (0, 255, 0), 2) | |||||

| y = top - 15 if top - 15 > 15 else top + 15 | |||||

| cv2.putText(frame, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX, | |||||

| 0.75, (0, 255, 0), 2) | |||||

| # if the video writer is None *AND* we are supposed to write | |||||

| # the output video to disk initialize the writer | |||||

| if writer is None and args["output"] is not None: | |||||

| fourcc = cv2.VideoWriter_fourcc(*"MJPG") | |||||

| writer = cv2.VideoWriter(args["output"], fourcc, 20, | |||||

| (frame.shape[1], frame.shape[0]), True) | |||||

| # if the writer is not None, write the frame with recognized | |||||

| # faces to disk | |||||

| if writer is not None: | |||||

| writer.write(frame) | |||||

| # check to see if we are supposed to display the output frame to | |||||

| # the screen | |||||

| if args["display"] > 0: | |||||

| cv2.imshow("Frame", frame) | |||||

| key = cv2.waitKey(1) & 0xFF | |||||

| # if the `q` key was pressed, break from the loop | |||||

| if key == ord("q"): | |||||

| break | |||||

| except KeyboardInterrupt: | |||||

| break | |||||

| # do a bit of cleanup | |||||

| cv2.destroyAllWindows() | |||||

| vs.stop() | |||||

| # check to see if the video writer pointer needs to be released | |||||

| if writer is not None: | |||||

| writer.release() | |||||
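
The video scripts detect faces on a 750px-wide copy of each frame but draw on the original frame, so every box has to be multiplied by the resize ratio `r`. The sketch below shows that arithmetic on plain tuples; `resize_ratio` and `scale_box` are hypothetical helper names, and the box order `(top, right, bottom, left)` is the one unpacked in the drawing loop.

```python
def resize_ratio(original_width, resized_width):
    # Same expression as `r = frame.shape[1] / float(rgb.shape[1])`.
    return original_width / float(resized_width)


def scale_box(box, r):
    # Map a box found on the resized image back to original-frame pixels.
    top, right, bottom, left = box
    return (int(top * r), int(right * r), int(bottom * r), int(left * r))


# A 1500px-wide frame resized to 750px gives a ratio of 2.0.
r = resize_ratio(1500, 750)
print(scale_box((10, 110, 60, 40), r))
```

`int()` truncates toward zero, so a scaled box can be off by up to one pixel, which is harmless for drawing rectangles.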

+ 132

- 0

recognize_faces_video_file.py

| @ -0,0 +1,132 @@ | |||||

| # USAGE | |||||

| # python recognize_faces_video_file.py --encodings encodings.pickle --input videos/lunch_scene.mp4 | |||||

| # python recognize_faces_video_file.py --encodings encodings.pickle --input videos/lunch_scene.mp4 --output output/lunch_scene_output.avi --display 0 | |||||

| # import the necessary packages | |||||

| import face_recognition | |||||

| import argparse | |||||

| import imutils | |||||

| import pickle | |||||

| import time | |||||

| import cv2 | |||||

| # construct the argument parser and parse the arguments | |||||

| ap = argparse.ArgumentParser() | |||||

| ap.add_argument("-e", "--encodings", required=True, | |||||

| help="path to serialized db of facial encodings") | |||||

| ap.add_argument("-i", "--input", required=True, | |||||

| help="path to input video") | |||||

| ap.add_argument("-o", "--output", type=str, | |||||

| help="path to output video") | |||||

| ap.add_argument("-y", "--display", type=int, default=1, | |||||

| help="whether or not to display output frame to screen") | |||||

| ap.add_argument("-d", "--detection-method", type=str, default="cnn", | |||||

| help="face detection model to use: either `hog` or `cnn`") | |||||

| args = vars(ap.parse_args()) | |||||

| # load the known faces and embeddings | |||||

| print("[INFO] loading encodings...") | |||||

| data = pickle.loads(open(args["encodings"], "rb").read()) | |||||

| # initialize the pointer to the video file and the video writer | |||||

| print("[INFO] processing video...") | |||||

| stream = cv2.VideoCapture(args["input"]) | |||||

| writer = None | |||||

| # loop over frames from the video file stream | |||||

| while True: | |||||

| # grab the next frame | |||||

| (grabbed, frame) = stream.read() | |||||

| # if the frame was not grabbed, then we have reached the | |||||

| # end of the stream | |||||

| if not grabbed: | |||||

| break | |||||

| # convert the input frame from BGR to RGB then resize it to have | |||||

| # a width of 750px (to speed up processing) | |||||

| rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) | |||||

| rgb = imutils.resize(rgb, width=750) | |||||

| r = frame.shape[1] / float(rgb.shape[1]) | |||||

| # detect the (x, y)-coordinates of the bounding boxes | |||||

| # corresponding to each face in the input frame, then compute | |||||

| # the facial embeddings for each face | |||||

| boxes = face_recognition.face_locations(rgb, | |||||

| model=args["detection_method"]) | |||||

| encodings = face_recognition.face_encodings(rgb, boxes) | |||||

| names = [] | |||||

| # loop over the facial embeddings | |||||

| for encoding in encodings: | |||||

| # attempt to match each face in the input image to our known | |||||

| # encodings | |||||

| matches = face_recognition.compare_faces(data["encodings"], | |||||

| encoding) | |||||

| name = "Unknown" | |||||

| # check to see if we have found a match | |||||

| if True in matches: | |||||

| # find the indexes of all matched faces then initialize a | |||||

| # dictionary to count the total number of times each face | |||||

| # was matched | |||||

| matchedIdxs = [i for (i, b) in enumerate(matches) if b] | |||||

| counts = {} | |||||

| # loop over the matched indexes and maintain a count for | |||||

| # each recognized face | |||||

| for i in matchedIdxs: | |||||

| name = data["names"][i] | |||||

| counts[name] = counts.get(name, 0) + 1 | |||||

| # determine the recognized face with the largest number | |||||

| # of votes (note: in the event of an unlikely tie Python | |||||

| # will select first entry in the dictionary) | |||||

| name = max(counts, key=counts.get) | |||||

| # update the list of names | |||||

| names.append(name) | |||||

| # loop over the recognized faces | |||||

| for ((top, right, bottom, left), name) in zip(boxes, names): | |||||

| # rescale the face coordinates | |||||

| top = int(top * r) | |||||

| right = int(right * r) | |||||

| bottom = int(bottom * r) | |||||

| left = int(left * r) | |||||

| # draw the predicted face name on the image | |||||

| cv2.rectangle(frame, (left, top), (right, bottom), | |||||

| (0, 255, 0), 2) | |||||

| y = top - 15 if top - 15 > 15 else top + 15 | |||||

| cv2.putText(frame, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX, | |||||

| 0.75, (0, 255, 0), 2) | |||||

| # if the video writer is None *AND* we are supposed to write | |||||

| # the output video to disk initialize the writer | |||||

| if writer is None and args["output"] is not None: | |||||

| fourcc = cv2.VideoWriter_fourcc(*"MJPG") | |||||

| writer = cv2.VideoWriter(args["output"], fourcc, 24, | |||||

| (frame.shape[1], frame.shape[0]), True) | |||||

| # if the writer is not None, write the frame with recognized | |||||

| # faces to disk | |||||

| if writer is not None: | |||||

| writer.write(frame) | |||||

| # check to see if we are supposed to display the output frame to | |||||

| # the screen | |||||

| if args["display"] > 0: | |||||

| cv2.imshow("Frame", frame) | |||||

| key = cv2.waitKey(1) & 0xFF | |||||

| # if the `q` key was pressed, break from the loop | |||||

| if key == ord("q"): | |||||

| break | |||||

| # close the video file pointers | |||||

| stream.release() | |||||

| # check to see if the video writer pointer needs to be released | |||||

| if writer is not None: | |||||

| writer.release() | |||||
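
All three recognition scripts position the name label with the same one-line rule: draw it 15px above the box, unless that would leave it too close to the top edge of the frame, in which case draw it 15px below the top of the box. A small sketch of that rule follows; the function name `label_y` and the `margin` parameter are illustrative, not names from the commit.

```python
def label_y(top, margin=15):
    # Prefer a baseline `margin` pixels above the box; fall back to a
    # baseline inside the box when the label would sit within `margin`
    # pixels of the top of the frame.
    above = top - margin
    return above if above > margin else top + margin


for top in (100, 31, 30, 5):
    print(top, label_y(top))
```

The comparison is strict, so a box whose top is exactly 30px from the frame edge already takes the fallback position.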

+ 114

- 0

search_bing_api.py

| @ -0,0 +1,114 @@ | |||||

| # USAGE | |||||

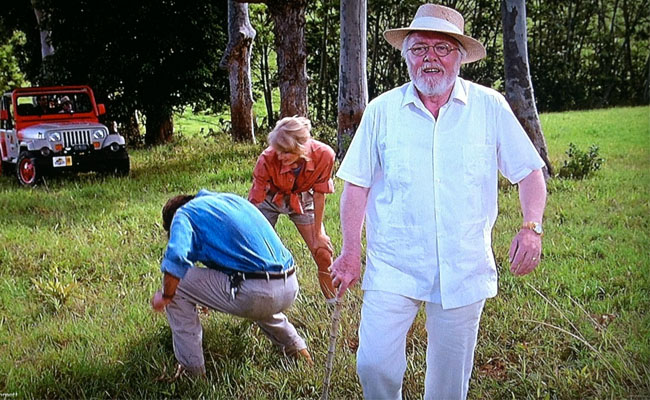

| # python search_bing_api.py --query "alan grant" --output dataset/alan_grant | |||||

| # python search_bing_api.py --query "ian malcolm" --output dataset/ian_malcolm | |||||

| # python search_bing_api.py --query "ellie sattler" --output dataset/ellie_sattler | |||||

| # python search_bing_api.py --query "john hammond jurassic park" --output dataset/john_hammond | |||||

| # python search_bing_api.py --query "owen grady jurassic world" --output dataset/owen_grady | |||||

| # python search_bing_api.py --query "claire dearing jurassic world" --output dataset/claire_dearing | |||||

| # import the necessary packages | |||||

| from requests import exceptions | |||||

| import argparse | |||||

| import requests | |||||

| import cv2 | |||||

| import os | |||||

| # construct the argument parser and parse the arguments | |||||

| ap = argparse.ArgumentParser() | |||||

| ap.add_argument("-q", "--query", required=True, | |||||

| help="search query to search Bing Image API for") | |||||

| ap.add_argument("-o", "--output", required=True, | |||||

| help="path to output directory of images") | |||||

| args = vars(ap.parse_args()) | |||||

| # set your Microsoft Cognitive Services API key along with (1) the | |||||

| # maximum number of results for a given search and (2) the group size | |||||

| # for results (maximum of 50 per request) | |||||

| API_KEY = "INSERT_YOUR_API_KEY_HERE" | |||||

| MAX_RESULTS = 100 | |||||

| GROUP_SIZE = 50 | |||||

| # set the endpoint API URL | |||||

| URL = "https://api.cognitive.microsoft.com/bing/v7.0/images/search" | |||||

| # when attempting to download images from the web both the Python | |||||

| # programming language and the requests library have a number of | |||||

| # exceptions that can be thrown so let's build a list of them now | |||||

| # so we can filter on them | |||||

| EXCEPTIONS = set([IOError, FileNotFoundError, | |||||

| exceptions.RequestException, exceptions.HTTPError, | |||||

| exceptions.ConnectionError, exceptions.Timeout]) | |||||

| # store the search term in a convenience variable then set the | |||||

| # headers and search parameters | |||||

| term = args["query"] | |||||

| headers = {"Ocp-Apim-Subscription-Key" : API_KEY} | |||||

| params = {"q": term, "offset": 0, "count": GROUP_SIZE} | |||||

| # make the search | |||||

| print("[INFO] searching Bing API for '{}'".format(term)) | |||||

| search = requests.get(URL, headers=headers, params=params) | |||||

| search.raise_for_status() | |||||

| # grab the results from the search, including the total number of | |||||

| # estimated results returned by the Bing API | |||||

| results = search.json() | |||||

| estNumResults = min(results["totalEstimatedMatches"], MAX_RESULTS) | |||||

| print("[INFO] {} total results for '{}'".format(estNumResults, | |||||

| term)) | |||||

| # initialize the total number of images downloaded thus far | |||||

| total = 0 | |||||

| # loop over the estimated number of results in `GROUP_SIZE` groups | |||||

| for offset in range(0, estNumResults, GROUP_SIZE): | |||||

| # update the search parameters using the current offset, then | |||||

| # make the request to fetch the results | |||||

| print("[INFO] making request for group {}-{} of {}...".format( | |||||

| offset, offset + GROUP_SIZE, estNumResults)) | |||||

| params["offset"] = offset | |||||

| search = requests.get(URL, headers=headers, params=params) | |||||

| search.raise_for_status() | |||||

| results = search.json() | |||||

| print("[INFO] saving images for group {}-{} of {}...".format( | |||||

| offset, offset + GROUP_SIZE, estNumResults)) | |||||

| # loop over the results | |||||

| for v in results["value"]: | |||||

| # try to download the image | |||||

| try: | |||||

| # make a request to download the image | |||||

| print("[INFO] fetching: {}".format(v["contentUrl"])) | |||||

| r = requests.get(v["contentUrl"], timeout=30) | |||||

| # build the path to the output image | |||||

| ext = v["contentUrl"][v["contentUrl"].rfind("."):] | |||||

| p = os.path.sep.join([args["output"], "{}{}".format( | |||||

| str(total).zfill(8), ext)]) | |||||

| # write the image to disk | |||||

| f = open(p, "wb") | |||||

| f.write(r.content) | |||||

| f.close() | |||||

| # catch any errors that would prevent us from downloading the | |||||

| # image | |||||

| except Exception as e: | |||||

| # check to see if our exception is in our list of | |||||

| # exceptions to check for | |||||

| if type(e) in EXCEPTIONS: | |||||

| print("[INFO] skipping: {}".format(v["contentUrl"])) | |||||

| continue | |||||

| # try to load the image from disk | |||||

| image = cv2.imread(p) | |||||

| # if the image is `None` then we could not properly load the | |||||

| # image from disk (so it should be ignored) | |||||

| if image is None: | |||||

| print("[INFO] deleting: {}".format(p)) | |||||

| os.remove(p) | |||||

| continue | |||||

| # update the counter | |||||

| total += 1 | |||||
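
One detail of the download loop is worth a closer look: `type(e) in EXCEPTIONS` only matches when the raised exception is exactly one of the listed classes, so a subclass is not recognised, and as the diff reads, an unrecognised exception is silently swallowed before execution carries on to `cv2.imread(p)`. The sketch below contrasts that check with an `isinstance` test, using built-in exceptions as stand-ins for the `requests` ones; both helper names are hypothetical.

```python
def is_expected_exact(exc, expected):
    # Mirrors `type(e) in EXCEPTIONS`: exact class matches only.
    return type(exc) in set(expected)


def is_expected(exc, expected):
    # isinstance() also accepts subclasses of the listed classes.
    return isinstance(exc, tuple(expected))


# ConnectionResetError is a subclass of OSError (IOError is an alias of
# OSError in Python 3), so only the isinstance-based check recognises it.
err = ConnectionResetError("peer closed the connection")
print(is_expected_exact(err, [OSError]), is_expected(err, [OSError]))
```

An equivalent option would be to name the expected classes directly in the handler, `except tuple(EXCEPTIONS):`, which lets anything unexpected propagate instead of being hidden.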